In 2025, the AI coding assistant landscape is more competitive than ever. Two giants are battling for developer mindshare: OpenAI’s GPT-5 and Anthropic’s Claude 4.1 (released as Claude Opus 4.1). Both promise smarter code generation, better reasoning, and tighter integration into your workflow.

For software engineers like me, the question is no longer whether to use AI for coding — it’s which one to trust with your work. In this post, we’ll look at how GPT-5 and Claude 4.1 perform specifically for coding, from benchmarks and reasoning to cost and real-world developer feedback.

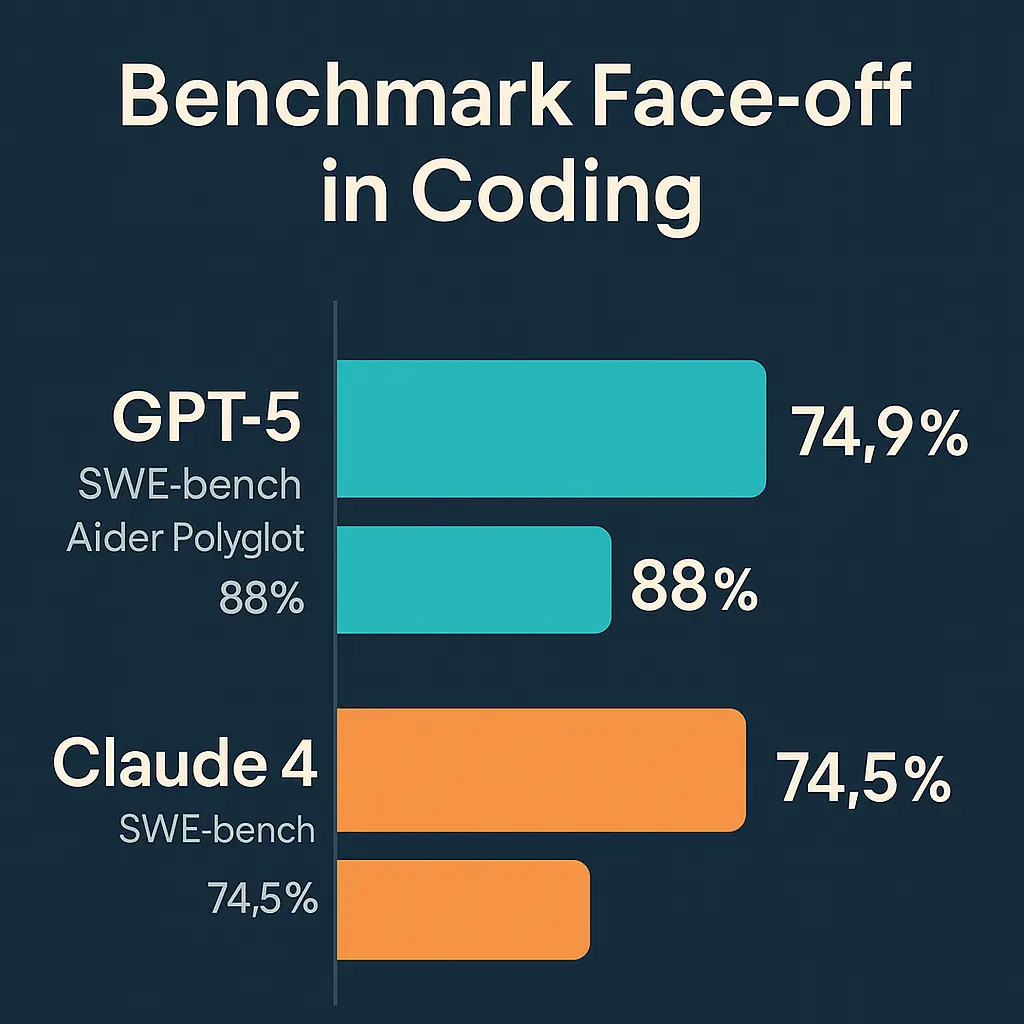

Benchmark Face-off in Coding

When it comes to raw coding benchmarks, the numbers are close, almost too close.

GPT-5 has shown:

- SWE-bench Verified score: 74.9% (up from GPT-4.1’s ~54.6%)

- Aider Polyglot score: 88%

Claude 4.1 is right there with:

- SWE-bench Verified score: 74.5%

While benchmarks show a statistical tie, developers on forums note subtle differences. GPT-5 tends to excel at quick code generation and bug fixing. Claude 4.1 shines with deeply contextual and multi-file coding tasks, especially in large projects.
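To see why a 0.4-point gap reads as a tie, here is a minimal sketch that compares the gap with the sampling noise of a pass rate. The task count of 500 is an assumption for illustration; substitute the actual size of the evaluated set.

```python
import math


def standard_error(pass_rate: float, n_tasks: int) -> float:
    """Binomial standard error of a pass rate measured on n_tasks tasks."""
    return math.sqrt(pass_rate * (1.0 - pass_rate) / n_tasks)


# Scores quoted in this post, expressed as fractions.
gpt5_score = 0.749
claude_score = 0.745

# Assumption: roughly 500 tasks in the evaluated set.
N_TASKS = 500

gap = abs(gpt5_score - claude_score)
se = standard_error(gpt5_score, N_TASKS)

print(f"gap between models: {gap * 100:.1f} points")
print(f"standard error of one score: {se * 100:.1f} points")
print("within noise" if gap < se else "larger than one standard error")
```

Under that assumption the gap is much smaller than one standard error, so the benchmark alone cannot separate the two models.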

Context Windows, Reasoning & Memory

For complex coding, context size and reasoning are critical.

- GPT-5 accepts up to 272K input tokens, enough to feed in small-to-medium repositories, long documentation, or extended conversations. It also benefits from faster reasoning and improved multimodal capabilities for developers who work with visual assets alongside code.

- Claude 4.1 offers extended reasoning that can sustain “hours-long” tasks. Developers praise its ability to keep track of large codebases without losing variable definitions, architecture details, or prior decisions.

In practice, GPT-5 can handle massive inputs, but Claude 4.1 has a knack for keeping the logic clean over long sessions, with fewer reported hallucinations.
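Before pasting a repository into a prompt, it helps to check roughly whether it fits. The sketch below is one way to do that. The four-characters-per-token ratio is a crude assumption rather than a real tokenizer, and the function names and the 20K reserve are hypothetical.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4      # assumption: rough heuristic, not a real tokenizer
INPUT_BUDGET = 272_000   # the 272K figure quoted above


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def repo_token_estimate(root: str, suffixes=(".py", ".md")) -> int:
    """Sum the estimated tokens of all matching files under root."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            total += estimate_tokens(text)
    return total


def fits_in_budget(tokens: int, budget: int = INPUT_BUDGET,
                   reserve: int = 20_000) -> bool:
    """True if tokens still leave `reserve` tokens for instructions."""
    return tokens + reserve <= budget
```

A call such as `fits_in_budget(repo_token_estimate("."))` gives a quick yes or no. For anything close to the limit, count with the provider's own tokenizer instead.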

Tooling & IDE Integration

AI coding agents are only as good as the tools they integrate with.

- GPT-5 slots directly into tools like Cursor, GitHub Copilot, and JetBrains IDEs. Developers report it’s particularly good at catching bugs and suggesting improvements mid-flow without interrupting your work.

- Claude 4.1 powers Claude Code, Anthropic’s own coding environment, and integrates with popular developer platforms. Its “agentic” features allow it to proactively propose changes, refactor code, and coordinate multi-step builds.

If your workflow is heavy on GitHub and Copilot, GPT-5 might feel like a natural fit. If you want a self-directed AI that’s good at handling larger tasks autonomously, Claude 4.1 has the edge.

Cost & Efficiency

AI costs are no longer a trivial consideration for serious development work.

- GPT-5 comes in multiple tiers (Mini, Nano, and Pro), giving flexibility based on task complexity. This makes it attractive for cost-sensitive teams that still want access to top-tier performance for occasional big jobs.

- Claude 4.1 charges around $15/million input tokens and $75/million output tokens, with discounts for caching and batching. Anthropic has also introduced usage limits to prevent “inference whales” from consuming excessive compute resources.

For heavy, repetitive coding tasks, Claude’s caching discounts can save a lot. For ad-hoc requests or mixed workloads, GPT-5’s tiered structure can be more economical.
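To make the Claude 4.1 pricing concrete, here is a small cost estimator built on the $15 and $75 per million token figures quoted above. The `cached_price_ratio` value used in the example is an illustrative placeholder, not a quoted rate; check the current pricing page before relying on it.

```python
INPUT_PRICE_PER_M = 15.0    # USD per million input tokens (quoted above)
OUTPUT_PRICE_PER_M = 75.0   # USD per million output tokens (quoted above)


def request_cost(input_tokens: int, output_tokens: int,
                 cached_fraction: float = 0.0,
                 cached_price_ratio: float = 1.0) -> float:
    """Estimated USD cost of one request.

    cached_fraction: share of input tokens served from cache (0 to 1).
    cached_price_ratio: cached input price relative to normal input price.
        Hypothetical parameter; the default of 1.0 means no discount.
    """
    cached = input_tokens * cached_fraction
    fresh = input_tokens - cached
    input_cost = (fresh + cached * cached_price_ratio) * INPUT_PRICE_PER_M / 1_000_000
    output_cost = output_tokens * OUTPUT_PRICE_PER_M / 1_000_000
    return input_cost + output_cost


# A 100K-token prompt with a 5K-token reply, no caching.
baseline = request_cost(100_000, 5_000)

# Same request with 90% of the prompt cached at an assumed 0.1 price ratio.
with_cache = request_cost(100_000, 5_000,
                          cached_fraction=0.9, cached_price_ratio=0.1)

print(f"no caching:   ${baseline:.3f}")
print(f"with caching: ${with_cache:.3f}")
```

The output side dominates quickly at $75 per million tokens, so caching helps most when the prompt is long and repeated while the replies stay short.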

Developer Experience & Feedback

I scoured developer communities for firsthand impressions.

What people like about GPT-5:

- Faster at generating initial drafts of code

- Good at producing front-end UI elements quickly

- Feels responsive and “in the flow” in interactive coding sessions

What people like about Claude 4.1:

- Stronger at long-context, multi-file reasoning

- Less prone to hallucinations in large projects

- More consistent with style and architecture decisions

One recurring note: GPT-5 sometimes mismanages variable names in very large contexts, whereas Claude 4.1 stays steady. On the flip side, Claude 4.1 can occasionally overthink simple problems, leading to verbose solutions.

Best Use Cases for Each

Based on the data and feedback, here’s where each model shines:

| Use Case | GPT-5 | Claude 4.1 |
|---|---|---|
| Quick UI component generation | ✅ | |
| Large-scale refactoring | | ✅ |
| Cost-sensitive small tasks | ✅ | |
| Multi-hour reasoning sessions | | ✅ |
| Balanced workload with bursts of big jobs | ✅ | |
| Consistent architecture over time | | ✅ |

My Personal Take

In my own experience, I really enjoyed working with Claude 4. Its ability to keep track of long coding sessions, maintain structure, and deliver consistent results has been a huge productivity boost. Moving to Claude 4.1 feels natural for me.

That said, GPT-5’s speed and knack for quick solutions make it hard to ignore. I’ll keep exploring GPT-5, especially for rapid prototyping and smaller coding tasks where its responsiveness can shave minutes off my workflow.

You can also explore “Top 10 AI Tools Every Developer Should Know in 2025” to see how other AI tools complement GPT-5 and Claude 4.1 in your coding workflow.

FAQ

Q: Is GPT-5 or Claude 4.1 better for large codebases?

A: Claude 4.1 generally handles extended contexts with more stability, making it a safer choice for big projects.

Q: Which one is more cost-efficient for developers?

A: GPT-5’s tiered plans suit variable workloads, while Claude 4.1’s caching discounts benefit heavy, repetitive usage.

Q: Can I use both in the same workflow?

A: Absolutely. Many developers use GPT-5 for rapid drafts and Claude 4.1 for refinement and long-context work.
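One way to picture a combined workflow is a small routing helper that sends quick, narrow tasks to GPT-5 and long or multi-file work to Claude 4.1. This is only a sketch: the `Task` fields, the thresholds, and the model labels are hypothetical and are not official API identifiers.

```python
from dataclasses import dataclass

# Plain labels for illustration, not official API model identifiers.
GPT5 = "gpt-5"
CLAUDE = "claude-4.1"


@dataclass
class Task:
    description: str
    files_touched: int = 1
    expected_minutes: int = 5


def pick_model(task: Task, max_quick_files: int = 3,
               max_quick_minutes: int = 30) -> str:
    """Route small, quick tasks to GPT-5 and larger ones to Claude 4.1.

    The thresholds are hypothetical defaults; tune them to your own work.
    """
    is_large = (task.files_touched > max_quick_files
                or task.expected_minutes > max_quick_minutes)
    return CLAUDE if is_large else GPT5


print(pick_model(Task("draft a button component")))
print(pick_model(Task("refactor the auth module", files_touched=40)))
```

The thresholds simply encode the pattern described in this post: rapid drafts on one side, refinement and long-context work on the other.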

If you want to speed up your coding without losing control, both GPT-5 and Claude 4.1 are worth a place in your toolkit. For me, the balance leans slightly towards Claude 4.1 — but the story’s far from over.